Final Showdown of Machine Learning Algorithms

CNN, Mobile-Net, KNN, Random Forest, and MLP. Which algorithm is the best?

The Story

gif by Giphy

It all started with my young cousin, who was lost in his own world, doodling in his drawing book. I asked him what is he doing. He replied he is making a cat; it didn’t look like a cat at all. He asked me to play a game with him in which I would recognize what he is drawing. It was fun for some time, but soon, I got bored.

I didn’t want to hurt his feelings by not playing with him, so I used my computer vision and python skills to make a doodle classifier. Now the question was how will I implement it; There are hundreds of methods to classify a doodle, and I had to pick the most accurate one, which takes the least amount of time to train, occupies less memory, requires less processing power, and doesn’t require TB’s of data to give meaningful results.

After some web surfing, I found the top 5 algorithms that can complete this task in the best way possible, but each website I visited told a different story. Some were saying CNN is the best, while others said mobile-net is best. I thought — well, let’s test out all of them. I found a great dataset containing lots of doodles with their labels in a Kaggle competition available for free to download.

Image classification is a vast topic as there are tons of algorithms available to use for various applications. Image classification is so vast and ever-changing that every day new algorithms are being created and new applications of it are emerging. Thus, it was difficult for me to handpick a few algorithms given that even they have hundreds of variations. So, this post will investigate which algorithm performs best for doodle classification specifically.

I will also test how reliable these algorithms are in other situations such as handwritten character classification, number plate recognition, etc.

What Are Covered

- A brief introduction to ML techniques used in research

- Evaluation Metrics

- Details on parameters chosen for research

- Results

- Limitations and Conclusion

Let’s Start With a Brief Intro to Machine Learning Algorithms Used

There are thousands of algorithms for doodle classification, here I have shortlisted a few famous ones which I will explore -

1) Random Forest

We can use the random forest algorithm for both classification and regression. It is like decision trees, except it uses hundreds of decision trees to come up to a conclusion. A decision tree separates data into various classes based on similar features. For each data point, it checks if it has a certain feature, most common data falls under the same class. In the random forest algorithm, we take many decision trees and randomly give them fewer features to check from, ex if we have 100 features, we might give 10 random features to each tree. Some trees will assign incorrect classes, but many will be right! We take the majority and create our classification model.

Research paper on random forest algorithm: Leo Breiman A great article on random forest algorithms by Tony Yiu

2) KNN

K-nearest neighbors (KNN) can be used both as a classification and a regression algorithm. In KNN, data points are separated into several classes to predict the classification of a new sample point. To achieve this task, it uses distance formula to calculate distances between various data points, based on this distance, it then defines region boundaries for each class. Any new data point will fall into one of these regions and will be assigned that class.

Research paper on KNN: Gongde Guo

3) MLP

Multi-layer perception (MLP) is a form of feed-forward artificial neural network. MLP has many layers, but only a logistic function in its hidden layers and a softmax function at the output layer. The algorithm takes in a single big vector as input and performs matrix operations on the input layer and hidden layers, then the result goes through a logistic function, and its output goes through another hidden layer. This process is repeated until the network reaches the output layer, where a single output is produced using the softmax function.

Research paper on MLP: Popescu Marius

4) CNN

Convolution neural networks (CNN) is one of the easiest to implement deep learning computer vision algorithm. First, it takes an input image of a given size and creates multiple filters/feature detectors (which is initially a randomly generated matrix of the given size) for it, a filter aims to recognize certain patterns in an image, the filter is moved across the image and matrix multiplication is done between the matrix and the image. This filter slides throughout the image to gather more features, we then use an activation function mostly a rectified linear unit function to increase non-linearity or preserve only important features, then we use max-pooling function to add up all the values in a given matrix size (ex- if we choose a matrix of 4 then it will add all the 4 values to create 1 value), thus reducing the size of our output to make it faster. The last step is to flatten the final matrix which is passed as input to a basic ANN (artificial neural network) and get class predictions.

Research paper on CNN: Keiron Teilo O’Shea

5) Mobile-Net

The mobile-Net architecture uses depth-wise separable convolution, which comprises a depth-wise convolution and a point-wise convolution. Depth wise convolution is a channel-wise Dk Dk spatial convolution, suppose we have 3 channels (R, G, B) in an image then we will have 3DkDk spatial convolution. In point-wise convolution we have kernel size of 11M, where M is the number of channels in depth wise convolution, in this case, it’s 3. Therefore, we have one kernel of size 113; we iterate this one kernel through our 3DkDk output to get DkDk1 output. We can create N 113 kernels that output a DkDk1 image each, to get a final image of shape DkDk*N. The final step is to add depth wise convolution to pointwise convolution. This type of architecture reduces training time as we have lesser parameters to tune while having a minor effect on accuracy.

Research paper on Mobile net: Andrew G. Howard

Evaluation Metrics

Above are the samples of doodles used for this research.

I trained my machine learning models on the Kaggle quickdraw dataset that comprises 50 million images of different types of doodles. I divided this huge dataset into two parts: 35000 images for training, and 15000 for testing. I then calculated the training time for each algorithm on randomly chosen 5 different types of doodles. On the test set, I calculated mean average precision, accuracy, and recall for each algorithm.

Evaluation Metrics-

Training Time

Mean Average Precision

Accuracy

Recall

Details on Parameters Chosen

1) Random forest

n_estimators — number of decision trees in a forest. [10,50,100]

max_features — Features to be considered while splitting [‘auto’,’sqrt’]

max_depth — the maximum number of levels in the tree [2,4,6,8,10]

n_jobs — number of processes running in parallel, usually set as -1 to do maximum processes at a time.

criterion — it is a way to calculate loss and thus update the model to make the loss lesser and lesser. [‘entropy’,’cross_validation’]

I used ‘auto’ as the max_feature; 8 as the max_depth; -1 as n_jobs and ‘entropy’ as my criterion because they usually give the best results.

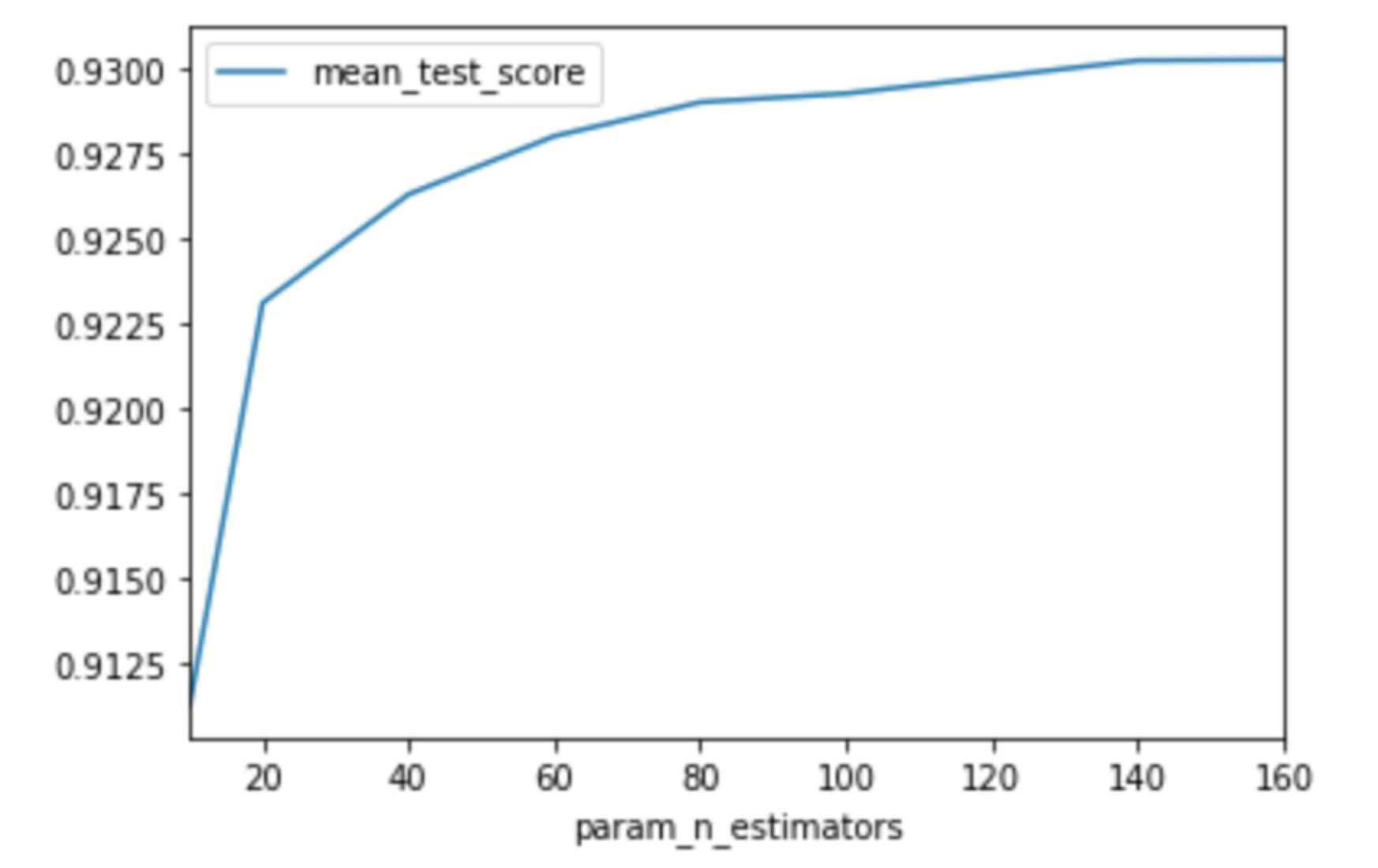

However, to find out the optimal number of trees, I used GridSearchCV. It tries all the given parameter combinations and creates a table to show results. As can be seen from the figure, there is no significant increase in the test score after 80 trees. Thus, I decided to train my classifier on 80 trees.

However, to find out the optimal number of trees, I used GridSearchCV. It tries all the given parameter combinations and creates a table to show results. As can be seen from the figure, there is no significant increase in the test score after 80 trees. Thus, I decided to train my classifier on 80 trees.

2) K-Nearest Neighbors (KNN)

n_neighbors — number of nearest data points to compare [2,5,8]

n_jobs — number of processes running in parallel, usually set as -1 to do maximum processes at a time

I did not change any default parameters for this model because they would give the best result.

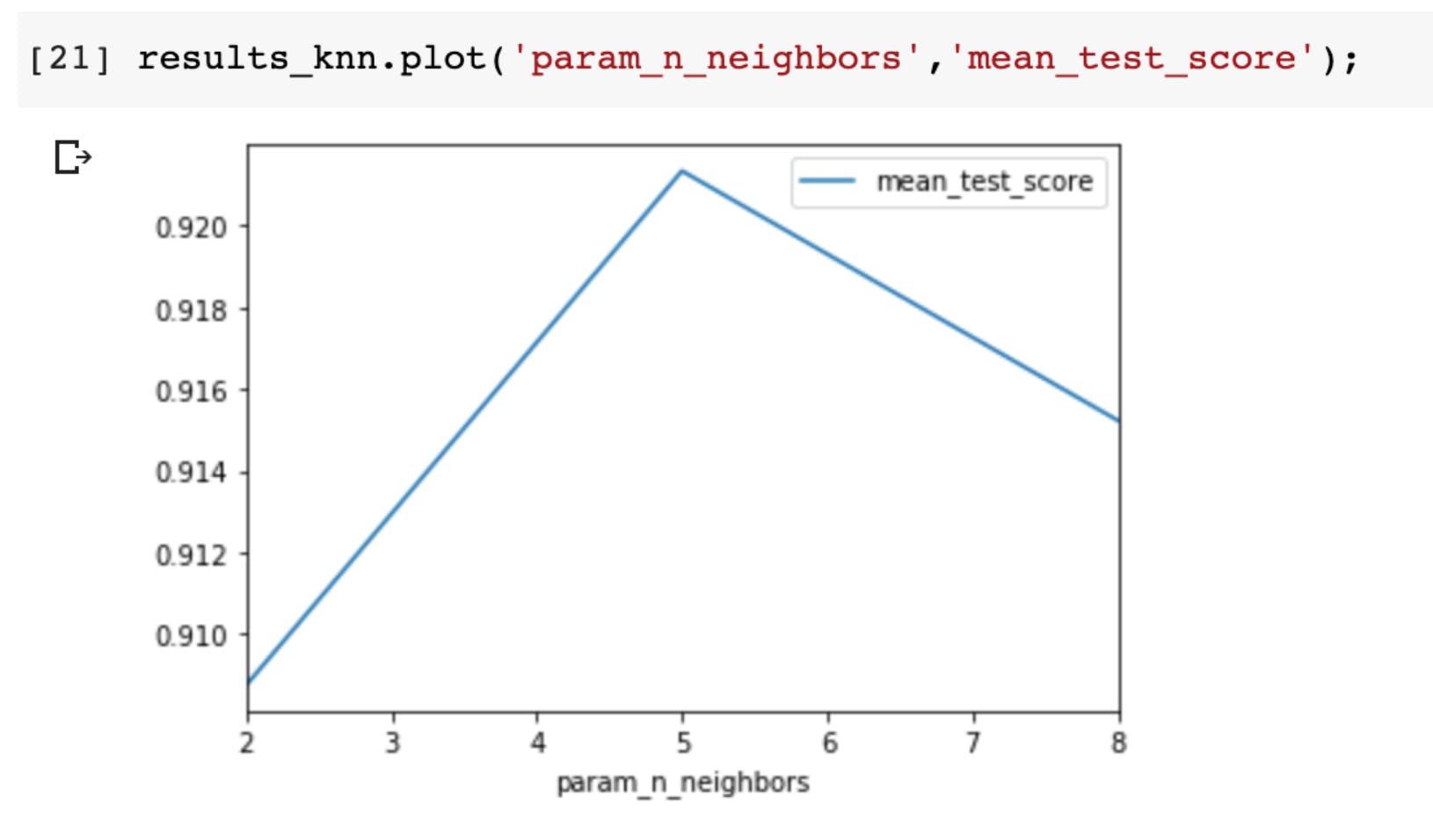

However, to find the optimum number of n_neighbors, I have used GridSearchCV, and this is the graph I got:

According to the graph, the test score declines after 5 n_neighbors, which means 5 is the optimum number of neighbors.

3) Multi-Layer Perceptron (MLP)

alpha- Most commonly known as the learning rate, it tells the network how fast to adjust the gradient. [0.01, 0.0001, 0.00001]

hidden_layer_sizes — It is a tuple of values that consists of the number of hidden nodes at each layer. [(50,50), (100,100,100), (750,750)]

activation — A function which provides value to important characteristics in an image and deletes the irrelevant information. [‘relu’,’tanh’,’logistic’].

solver — Also known as the optimizer, this parameter tells the network which technique to use for training the weights in a network. [‘sgd’,’adam’].

batch_size — It is the number of images to be processed at once. [200,100,200].

I have chosen activation as ‘relu’ and solver as ‘adam’ because these parameters give the best result.

However, to choose the number of hidden layers and alpha, I have used GridSearchCV.